HDR Insights Series Article 4: Dolby Vision

In the previous article, we discussed HDR tone mapping and how it is used to produce an optimum viewer experience on a range of display devices. This article discusses the basics of Dolby Vision metadata and the parameters that the user needs to validate before the content is delivered.

What is HDR metadata?

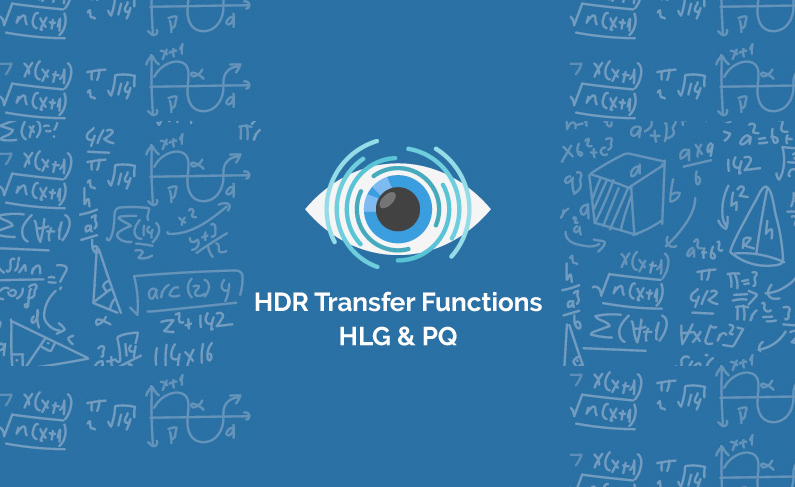

HDR metadata helps a display device show the content in an optimal manner. It describes the properties of the HDR content and of the mastering device, which the display device uses to map the content to its own color gamut and peak brightness. There are two types of metadata: static and dynamic.

Static metadata

Static metadata applies to the entire content. The mastering display properties are standardized in SMPTE ST 2086, while MaxCLL and MaxFALL are defined in CTA-861.3. The key items of static metadata are as follows:

- Mastering display properties: Properties defining the device on which content was mastered.

  - RGB color primaries

  - White point

  - Brightness range

- Maximum content light level (MaxCLL): Light level of the brightest pixel in the entire video stream.

- Maximum Frame-Average Light Level (MaxFALL): Average Light level of the brightest frame in the entire video stream.
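
The two content light level values above can be illustrated with a minimal sketch. It assumes each frame has already been reduced to a flat list of per-pixel light levels in nits (in CTA-861.3 the per-pixel value is the largest of the R, G and B components); the function names and sample frames are hypothetical and chosen only for illustration.

```python
from typing import Sequence


def max_cll(frames: Sequence[Sequence[float]]) -> float:
    """Light level (nits) of the brightest single pixel across all frames."""
    return max(max(frame) for frame in frames)


def max_fall(frames: Sequence[Sequence[float]]) -> float:
    """Highest per-frame average light level (nits) across all frames."""
    return max(sum(frame) / len(frame) for frame in frames)


# Three tiny hypothetical frames, each a flat list of pixel light levels in nits.
frames = [
    [100.0, 200.0, 300.0],  # frame average: 200
    [50.0, 50.0, 1000.0],   # contains the brightest pixel of the stream
    [400.0, 400.0, 400.0],  # highest frame average: 400
]

print(max_cll(frames))   # 1000.0
print(max_fall(frames))  # 400.0
```

Note that the brightest pixel and the brightest frame on average need not be in the same frame, which is why the two values are carried separately.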

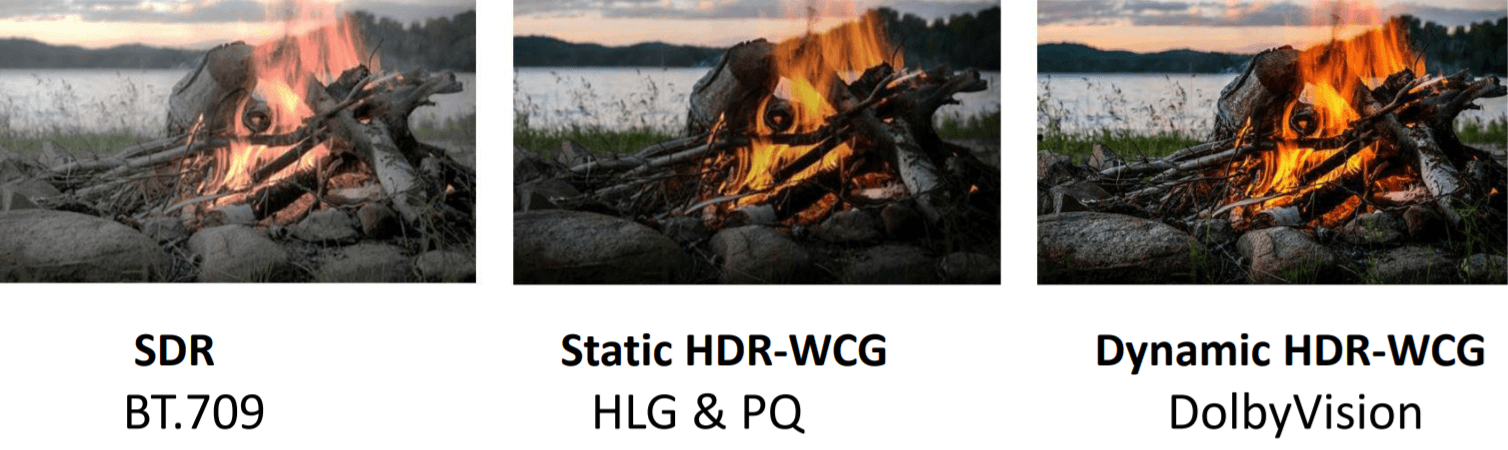

In typical content, the brightness and color range vary from shot to shot. The challenge with static metadata is that tone mapping performed from it is based only on the brightest frame in the entire content. As a result, the majority of the content will have greater compression of dynamic range and color gamut than needed. This leads to a poor viewing experience on less capable HDR display devices.

Dynamic metadata

Dynamic metadata allows the tone mapping to be performed on a per-scene basis. This leads to a significantly better viewing experience when the content is displayed on less capable HDR display devices. Dynamic metadata has been standardized in SMPTE ST 2094, which defines content-dependent metadata. Using dynamic metadata along with static metadata overcomes the issues that arise when only static metadata is used for tone mapping.
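
The difference between the two approaches can be seen in a toy sketch. It is not Dolby's content mapping or any standardized tone curve: it assumes a plain linear gain that fits a peak level into the display's peak, and the shot names, peak values and `scale_factor` helper are hypothetical.

```python
def scale_factor(content_peak_nits: float, display_peak_nits: float) -> float:
    """Linear gain that fits content_peak_nits within the display peak (never boosts)."""
    return min(1.0, display_peak_nits / content_peak_nits)


display_peak = 500.0  # hypothetical less capable HDR display

# Hypothetical peak light level of each shot, in nits.
shot_peaks = {"night_street": 200.0, "interior": 500.0, "sunset": 1000.0}

# Static metadata only knows the peak of the whole title.
title_peak = max(shot_peaks.values())
static_gain = scale_factor(title_peak, display_peak)

# Dynamic metadata lets the gain follow each shot.
dynamic_gains = {
    name: scale_factor(peak, display_peak) for name, peak in shot_peaks.items()
}

for name, gain in dynamic_gains.items():
    print(f"{name}: static gain {static_gain:.2f}, per-shot gain {gain:.2f}")
```

With the static gain, every shot is halved because of the single bright sunset shot. With per-shot gains, only the sunset is compressed and the two darker shots are left untouched.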

Dolby Vision

Dolby Vision uses dynamic metadata and is one of the most widely used HDR technologies today. It has been adopted by major OTT service providers such as Netflix and Amazon, as well as major studios and a host of prominent television manufacturers. Dolby Vision is standardized in SMPTE ST 2094-10. In addition to supporting dynamic metadata, Dolby Vision also allows multiple trims to be described for specific devices, which gives finer control over how the content is displayed on those devices.

Dolby has documented the details of its algorithm in what it refers to as Content Mapping (CM) documents. The original CM algorithm is version 2.9 (CMv2.9), which has been used since the introduction of Dolby Vision. Dolby introduced Dolby Vision Content Mapping version 4 (CMv4) in the fall of 2018. Both versions of the CM are still in use. The Dolby Vision Color Grading Best Practices Guide provides more information.

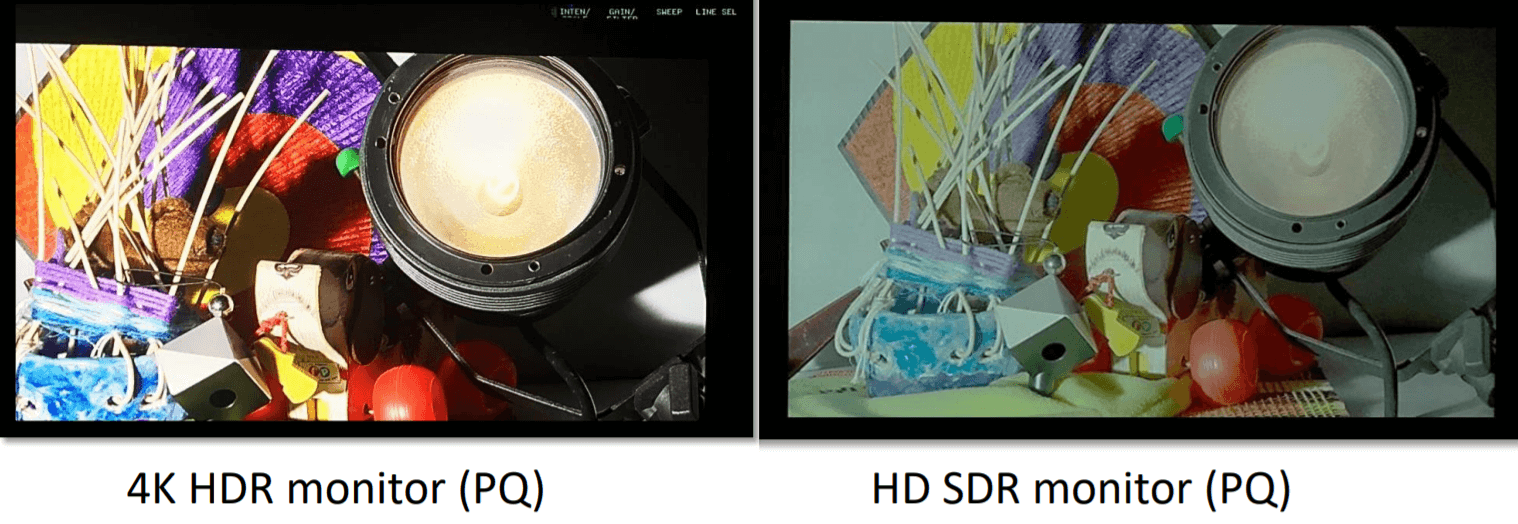

Dolby Vision metadata is coded at various ‘levels’, which are described below:

| Metadata Level/Field | Description |
| --- | --- |
| LEVEL 0 | GLOBAL METADATA (STATIC) |
| Mastering Display | Describes the characteristics of the mastering display used for the project |
| Aspect Ratio | Ratio of canvas and image (active area) |
| Frame Rate | Frame Rate |
| Target Display | Describes the characteristics of each target display used for L2 trim metadata |
| Color Encoding | Describes the image container deliverable |
| Algorithm/Trim Version | CM algorithm version and Trim version |
| LEVEL 1 | ANALYSIS METADATA (DYNAMIC) |
| L1 Min, Mid, Max | Three floating point values that characterize the dynamic range of the shot or frame. Shot-based L1 metadata is created by analyzing each frame contained in a shot in LMS color space and combined to describe the entire shot as L1Min, L1Mid, L1Max. Stored as LMS (CMv2.9) and L3 Offsets |
| LEVEL 2 | BACKWARDS COMPATIBLE PER-TARGET TRIM METADATA (DYNAMIC) |
| Reserved1, Reserved2, Reserved3, Lift, Gain, Gamma, Saturation, Chroma and Tone Detail | Automatically computed from L1, L3 and L8 (lift, gain, gamma, saturation, chroma, tone detail) metadata for backwards compatibility with CMv2.9 |
| LEVEL 3 | OFFSETS TO L1 (DYNAMIC) |
| L1 Min, Mid, Max | Three floating point values that are offsets to L1 Analysis metadata as L3Min, L3Mid, L3Max. L3Mid is a global user defined trim control. L1 is stored as CMv2.9 computed values, CMv4 reconstructs RGB values with L1 + L3 |
| LEVEL 5 | PER-SHOT ASPECT RATIO (DYNAMIC) |
| Canvas, Image | Used for defining shots that have different aspect ratios than the global L0 aspect ratio |
| LEVEL 6 | OPTIONAL HDR10 METADATA (STATIC) |
| MaxFALL, MaxCLL | Metadata for HDR10. MaxCLL: Maximum Content Light Level. MaxFALL: Maximum Frame Average Light Level |
| LEVEL 8 | PER-TARGET TRIM METADATA (DYNAMIC) |
| Lift, Gain, Gamma, Saturation, Chroma, Tone Detail, Mid Contrast Bias, Highlight Clipping, 6-vector (R,Y,G,C,B,M) saturation and 6-vector (R,Y,G,C,B,M) hue trims | User defined image controls to adjust the CMv4 algorithms per target with secondary color controls |
| LEVEL 9 | PER-SHOT SOURCE CONTENT PRIMARIES (DYNAMIC) |
| Rxy, Gxy, Bxy, WPxy | Stores the mastering display color primaries and white point as per-shot metadata |
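
To make the Level 1 row more concrete, here is a heavily simplified sketch of how per-frame statistics could be combined into one set of shot-level values. It is not Dolby's analysis: the real algorithm works in LMS color space and is specified in the CM documents. The sketch assumes each pixel has already been reduced to a single normalized value between 0 and 1, and the combination rule (lowest minimum, mean of the frame averages, highest maximum) is an assumption made for illustration. The `L1` class and function names are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence


@dataclass(frozen=True)
class L1:
    """Hypothetical container for the three L1 values of a frame or shot."""
    l1_min: float
    l1_mid: float
    l1_max: float


def analyze_frame(pixels: Sequence[float]) -> L1:
    """Min, average and max of one frame's normalized pixel values."""
    return L1(min(pixels), mean(pixels), max(pixels))


def analyze_shot(frames: Sequence[Sequence[float]]) -> L1:
    """Combine per-frame statistics into one shot-level triple (assumed rule)."""
    per_frame = [analyze_frame(frame) for frame in frames]
    return L1(
        l1_min=min(f.l1_min for f in per_frame),
        l1_mid=mean(f.l1_mid for f in per_frame),
        l1_max=max(f.l1_max for f in per_frame),
    )


# A hypothetical two-frame shot with three pixels per frame.
shot = [[0.0, 0.5, 1.0], [0.25, 0.25, 0.25]]
print(analyze_shot(shot))  # L1(l1_min=0.0, l1_mid=0.375, l1_max=1.0)
```

Because every frame in a shot shares the same triple, the display can apply one consistent mapping for the whole shot, which is why accurate shot boundaries matter for the QC checks discussed later.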

Dolby Vision QC requirements

Netflix, Amazon, and other streaming services are continuously adding more and more HDR titles to their library with the aim of improving the quality of experience for their viewers and differentiating their service offerings. This requires that the content suppliers are equipped to deliver good quality and compliant HDR content. Moreover, having the ability to verify quality before delivery becomes more important.

Many of these OTT services support both the HDR10 and Dolby Vision flavors of HDR. However, more and more Netflix HDR titles are now based on Dolby Vision. Dolby Vision is a new and complex technology, and therefore checking the content for correctness and compliance is not always easy. Delivering non-compliant HDR content can affect your business, so using a QC tool to assist in HDR QC can go a long way in maintaining a good standing with these OTT services.

Here are some of the important aspects to verify for HDR10 and Dolby Vision:

- HDR metadata presence

  - HDR10: Static metadata must be coded with the correct parameter values.

  - Dolby Vision: Static metadata must be present once and dynamic metadata must be present for every shot in the content.

- HDR metadata correctness. Content providers need to check the metadata for a number of issues:

  - Only one mastering display should be referenced in the metadata.

  - Correct mastering display properties: RGB primaries, white point and luminance range.

  - Correct MaxFALL and MaxCLL values.

  - All target displays must have unique IDs.

  - Correct algorithm version. Dolby supports two versions:

    - Metadata Version 2.0.5 XML for CMv2.9

    - Metadata Version 4.0.2 XML for CMv4

  - No frame gaps. All the shots, as well as frames, must be tightly aligned within the timeline, and there should not be any gap between frames and/or shots.

  - No overlapping shots. The timeline must be accurately cut into individual shots, and the analysis that generates L1 metadata should be performed on a per-shot basis. If the timeline is not accurately cut into shots, there will be issues with luminance consistency, which may lead to flashing and flickering artifacts during playback.

  - No negative duration for shots. Shot duration, as coded in the “Duration” field, must not be negative.

  - Single trim for a particular target display. There should be one and only one trim for a target display.

  - Level 1 metadata must be present for all the shots.

  - Valid canvas and image aspect ratio. Cross-check the canvas and image aspect ratio against a baseband-level verification of the actual content.

- Validation of video essence properties. Essential properties such as color matrix, color primaries, transfer characteristics, bit depth, etc. must be correctly coded.
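
Several of the timeline checks above lend themselves to automation. The following is a minimal sketch under stated assumptions: the `Shot` class and its fields are hypothetical stand-ins for values already parsed from the metadata and do not mirror the Dolby Vision XML schema; positions and durations are assumed to be in frames.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Shot:
    """Hypothetical, already-parsed view of one shot's metadata."""
    start: int                                        # first frame on the timeline
    duration: int                                     # length in frames
    l1: Optional[Tuple[float, float, float]] = None   # (min, mid, max), None if missing
    trim_target_ids: List[int] = field(default_factory=list)  # one entry per trim


def check_shots(shots: List[Shot]) -> List[str]:
    """Return human-readable issues found in the shot list."""
    issues = []
    ordered = sorted(shots, key=lambda s: s.start)
    for i, shot in enumerate(ordered):
        if shot.duration < 0:
            issues.append(f"shot {i}: negative duration")
        if shot.l1 is None:
            issues.append(f"shot {i}: missing L1 metadata")
        for target, count in Counter(shot.trim_target_ids).items():
            if count > 1:
                issues.append(f"shot {i}: {count} trims for target display {target}")
    # Each shot must start exactly where the previous one ends.
    for i, (prev, nxt) in enumerate(zip(ordered, ordered[1:])):
        prev_end = prev.start + prev.duration
        if nxt.start > prev_end:
            issues.append(f"gap between shot {i} and shot {i + 1}")
        elif nxt.start < prev_end:
            issues.append(f"overlap between shot {i} and shot {i + 1}")
    return issues


def check_unique_target_ids(target_ids: List[int]) -> List[str]:
    """Flag target display IDs that are declared more than once."""
    return [
        f"duplicate target display ID {t}"
        for t, count in Counter(target_ids).items()
        if count > 1
    ]


shots = [
    Shot(0, 24, (0.0, 0.1, 0.5), [1]),
    Shot(24, 48, None, [1, 1]),          # missing L1 and two trims for target 1
    Shot(80, 24, (0.0, 0.2, 0.6), [1]),  # previous shot ends at 72: gap
    Shot(100, 10, (0.0, 0.2, 0.6), [1]), # previous shot ends at 104: overlap
]
issues = check_shots(shots)
for issue in issues:
    print(issue)
print(check_unique_target_ids([1, 2, 2]))
```

Returning a list of issues rather than stopping at the first failure lets a single pass report every problem in a delivery.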

Netflix requires the Dolby Vision metadata to be embedded in the video stream for the content delivered to them. Reviewing metadata embedded in a video stream can be tedious, so an easy way to extract and review the entire metadata is a real advantage.

How can we help?

Venera’s QC products (Pulsar – for on-premise & Quasar – for cloud) can help in identifying these issues in an automated manner. We have worked extensively with various technology and media groups to create features that can help the users with their validation needs. And we have done so without introducing a lot of complexity for the users.

Depending on the volume of your content, you could consider one of our Perpetual license editions (Pulsar Professional or Pulsar Standard). For low-volume customers, we also have a unique option called Pulsar Pay-Per-Use (Pulsar PPU), an on-premise, usage-based QC software where you pay a nominal per-minute charge for the content that is analyzed. We also offer a free trial so you can test our software at no cost. A Pulsar brochure and more details on our pricing are also available.

If your content workflow is in the cloud, then you can use our Quasar QC service, which is the only native cloud QC service in the market. With advanced features like usage-based pricing, dynamic scaling, regional resourcing, a content security framework and a REST API, the platform is a good fit for content workflows requiring quality assurance. Quasar is currently supported on the AWS, Azure and Google cloud platforms and can also work with content stored on Backblaze B2 cloud storage.

Both Pulsar and Quasar come with a long list of ‘ready to use’ QC templates for Netflix, based on their latest published specifications, as well as for some of the other popular platforms such as iTunes, CableLabs and DPP, which can help you run QC jobs right out of the box. You can also enhance and modify any of these QC templates or build new ones, and we are happy to build new QC templates for your specific needs.